Learning the OWASP Top 10 feels like a major milestone for most beginners in cybersecurity. By the time someone finishes tutorials, labs, and explanations around these vulnerabilities, there is a strong sense of readiness. You understand the terminology, recognize common attack types, and feel equipped to start hunting on real bug bounty programs.

That confidence often disappears quickly.

When beginners open a real application, they usually do not see anything resembling what they practiced. Inputs behave normally. Errors are hidden. Pages respond exactly as expected. After some time, frustration starts building, and a common question appears.

If I know OWASP Top 10, why can’t I find any bugs?


The answer is not a lack of effort or intelligence. The problem is that OWASP Top 10 teaches awareness, not discovery.

OWASP Top 10 Explains Risk, Not How Bugs Actually Appear

OWASP Top 10 is primarily a risk awareness document. It exists to help organizations, developers, and security teams understand which categories of vulnerabilities cause the most damage and deserve the most attention. It creates a shared language so teams can discuss security issues consistently.

What it does not do is teach how vulnerabilities are introduced in real applications.

Beginners often treat OWASP Top 10 like a checklist. They assume that if they understand each item well enough, vulnerabilities will reveal themselves naturally. In reality, modern applications rarely fail in clean or isolated ways. They fail quietly, partially, or only under very specific conditions.

OWASP Top 10 tells you what can go wrong. Bug bounty requires learning how things go wrong when systems become complex, rushed, or poorly understood.

For official reference, OWASP maintains the document here:

https://owasp.org/www-project-top-ten/

Knowing Vulnerability Names Does Not Equal Finding Vulnerabilities

A common beginner habit is thinking in labels. While testing, the internal dialogue often sounds like this: “This looks like XSS,” or “Maybe this endpoint is vulnerable to IDOR.” This approach assumes vulnerabilities are visible objects that can be matched with the correct name.

That assumption is incorrect.

Real vulnerabilities are rarely obvious. They are side effects of behavior. They happen because the server trusts something it should not, because authorization is checked inconsistently, or because developers assumed users would behave in predictable ways.

Experienced hunters rarely begin by identifying vulnerability categories. Instead, they observe how the application behaves when something unexpected happens. Only after noticing strange or inconsistent behavior do they classify it as a known vulnerability type.

Beginners usually try to apply the classification first, which is why they miss subtle issues.
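To make "authorization is checked inconsistently" concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `INVOICES` dictionary stands in for a real database, and `view_invoice` and `export_invoice_pdf` are invented endpoint handlers, not code from any real application. Both handlers serve the same object, but only one of them checks who owns it.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Invoice:
    invoice_id: int
    owner: str
    amount: int


# Hypothetical in-memory data standing in for a real database.
INVOICES: Dict[int, Invoice] = {
    101: Invoice(101, "alice", 250),
    102: Invoice(102, "bob", 900),
}


def view_invoice(current_user: str, invoice_id: int) -> Optional[Invoice]:
    """Hypothetical 'view' endpoint: ownership is enforced here."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None or invoice.owner != current_user:
        return None
    return invoice


def export_invoice_pdf(current_user: str, invoice_id: int) -> Optional[str]:
    """Hypothetical 'export' endpoint for the same object: the ownership check was forgotten."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None:
        return None
    # current_user is never compared with invoice.owner, so any logged-in user
    # can export any invoice just by changing invoice_id.
    return f"PDF for invoice {invoice.invoice_id}: {invoice.amount}"


# Same user, same invoice ID, two different answers.
print(view_invoice("alice", 102))        # None
print(export_invoice_pdf("alice", 102))  # PDF for invoice 102: 900
```

A hunter requesting invoice 102 as alice would see the view page refuse and the export succeed. That inconsistency is the observation; calling it IDOR comes afterward.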

Labs Are Helpful, but They Set the Wrong Expectations

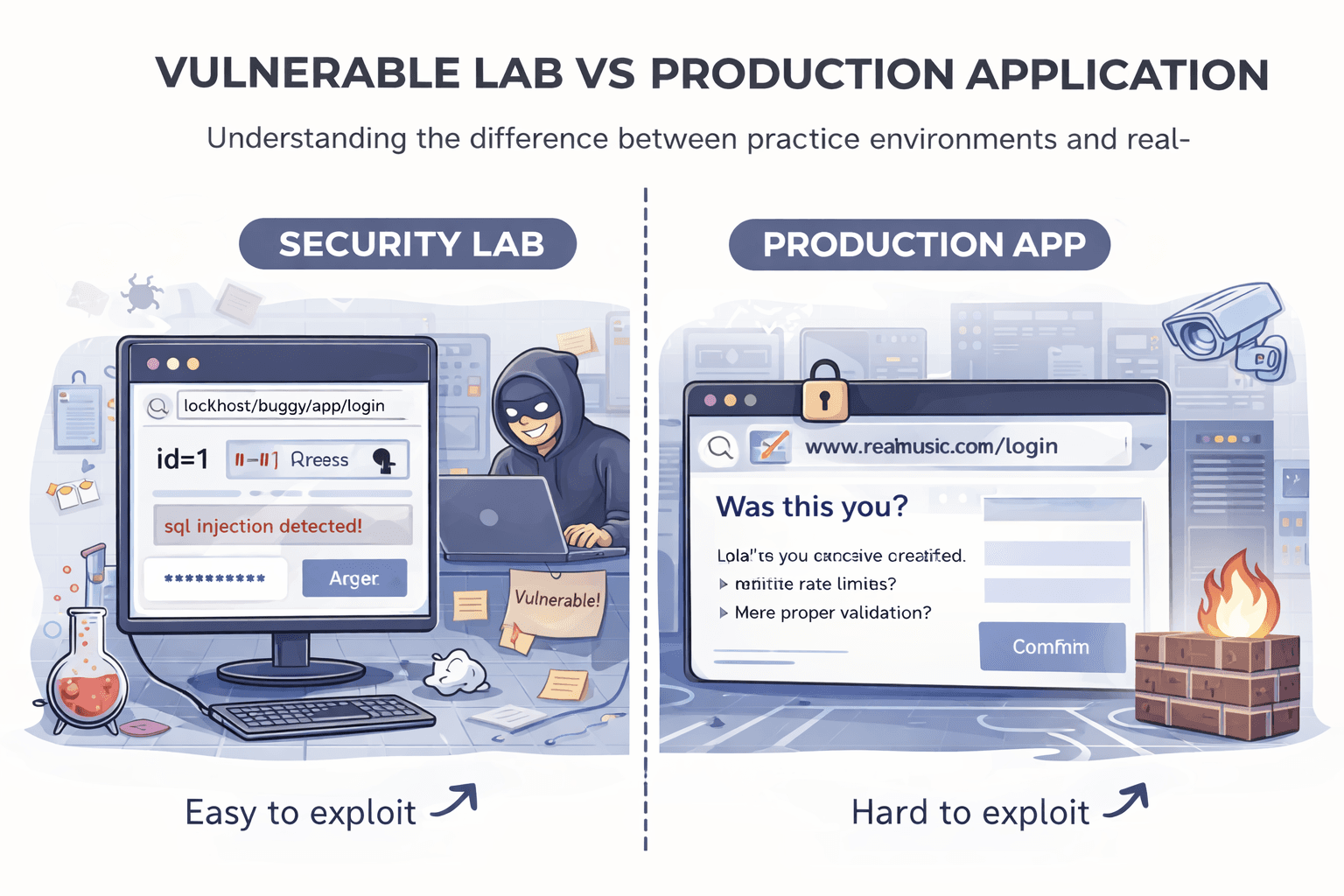

Practice labs play an important role in learning fundamentals. They introduce vulnerability concepts in a controlled environment and build confidence. However, labs are designed to be solved, and that design choice creates unrealistic expectations.

Labs contain deliberate weaknesses, clear entry points, and obvious feedback. When you inject something, the application responds in a way that confirms success or failure.

Production systems are designed to do the opposite. Errors are hidden, responses are normalized, and unexpected behavior is often suppressed. When beginners move from labs to live targets, they expect similar signals. When those signals do not appear, they assume the application is secure.

In most cases, the vulnerability is still there. It is simply harder to observe. This gap is also why many learners feel confused after finishing labs or CTFs.
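The hypothetical Python sketch below shows why the signal disappears. `run_search`, `lab_search`, and `production_search` are made-up names, and the quote-counting check is only a stand-in for any input the backend fails to handle. The lab version echoes the failure; the production version logs it and answers exactly as it would for a search with no matches.

```python
import logging
from typing import Dict, List, Tuple

logger = logging.getLogger("app")


def run_search(term: str) -> List[str]:
    # Hypothetical backend call. An odd number of quotes stands in for any
    # input the developer did not anticipate.
    if term.count("'") % 2:
        raise ValueError("unterminated string literal near " + repr(term))
    return []  # no matches in this toy example


def lab_search(term: str) -> Tuple[int, Dict]:
    try:
        return 200, {"results": run_search(term)}
    except ValueError as exc:
        # Lab style: the error is echoed back, confirming the input hit something fragile.
        return 500, {"error": str(exc)}


def production_search(term: str) -> Tuple[int, Dict]:
    try:
        return 200, {"results": run_search(term)}
    except ValueError:
        # Production style: log internally, then answer exactly like "no results".
        logger.exception("search failed")
        return 200, {"results": []}
```

From the outside, `production_search("o'neil")` and `production_search("oneil")` return the same thing, even though only one of them broke something on the server.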

Beginners Trust the User Interface Too Much

Another major reason beginners struggle is excessive trust in the user interface. They follow the intended flow, respect disabled buttons, and assume steps cannot be skipped. This behavior mirrors how normal users interact with applications.

Attackers do not behave like normal users.

Most serious vulnerabilities exist outside the UI. They appear when requests are replayed, parameters are modified, or sequences are altered. The interface is only a suggestion of how the application expects users to behave.

Experienced hunters focus on requests, state changes, and server-side behavior. Beginners stay at the surface and miss what actually matters. Many of these issues become visible only when you inspect the raw requests instead of the rendered page.
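As a minimal sketch of trusting the interface, assume a hypothetical checkout form that fills a hidden, read-only `price` field. The handler names, the `CATALOG` dictionary, and the request-as-dictionary shape are all assumptions made for illustration; a real request would arrive over HTTP.

```python
from typing import Dict

# Hypothetical catalog: prices in cents, held on the server.
CATALOG: Dict[str, int] = {"sku-1": 4999}


def checkout_trusting_client(request: Dict) -> int:
    # The form fills "price" from a hidden, read-only field, so the developer
    # assumed it could not change. A replayed request can send any value.
    return request["price"] * request["quantity"]


def checkout_server_priced(request: Dict) -> int:
    # The client only names the item; the price is looked up server-side.
    return CATALOG[request["sku"]] * request["quantity"]


tampered = {"sku": "sku-1", "price": 1, "quantity": 2}
print(checkout_trusting_client(tampered))  # 2
print(checkout_server_priced(tampered))    # 9998
```

Nothing in the UI lets a user edit the price, yet a replayed request with `"price": 1` is charged 2 cents for two items. The fix is not a better form; it is to stop reading the value from the client.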

Real Vulnerabilities Exist Because of Assumptions

Modern frameworks have reduced many classic injection flaws, but they have not eliminated flawed human assumptions. Developers assume users will follow the correct order, respect limits, and interact with features in predictable ways.

These assumptions are where real bugs live.

Beginners rarely challenge them. They test what is visible instead of what is implied. Experienced hunters intentionally try to break the mental model developers had when building the feature.

That difference in mindset explains why two people can test the same application and get completely different results.
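One assumption that shows up constantly is step order. The Python sketch below is hypothetical (the `Order`, `State`, and `ALLOWED` names are invented): `confirm_assuming_payment` trusts that the interface only offers "Confirm" after payment, while `transition` enforces the allowed sequence on the server with a small state machine.

```python
from enum import Enum
from typing import Dict, Set


class State(Enum):
    CART = "cart"
    PAID = "paid"
    CONFIRMED = "confirmed"


class Order:
    def __init__(self) -> None:
        self.state = State.CART


def confirm_assuming_payment(order: Order) -> None:
    # Assumes the UI only shows "Confirm" after payment, so nothing is checked.
    order.state = State.CONFIRMED


# Server-side definition of which step may follow which.
ALLOWED: Dict[State, Set[State]] = {
    State.CART: {State.PAID},
    State.PAID: {State.CONFIRMED},
    State.CONFIRMED: set(),
}


def transition(order: Order, new_state: State) -> None:
    # Rejects any step that skips or reorders the intended flow.
    if new_state not in ALLOWED[order.state]:
        raise ValueError(f"illegal transition {order.state.value} -> {new_state.value}")
    order.state = new_state
```

Confirming an unpaid order succeeds with the first handler and raises an error with the second. Testing this assumption means sending the final step's request without completing the earlier ones.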

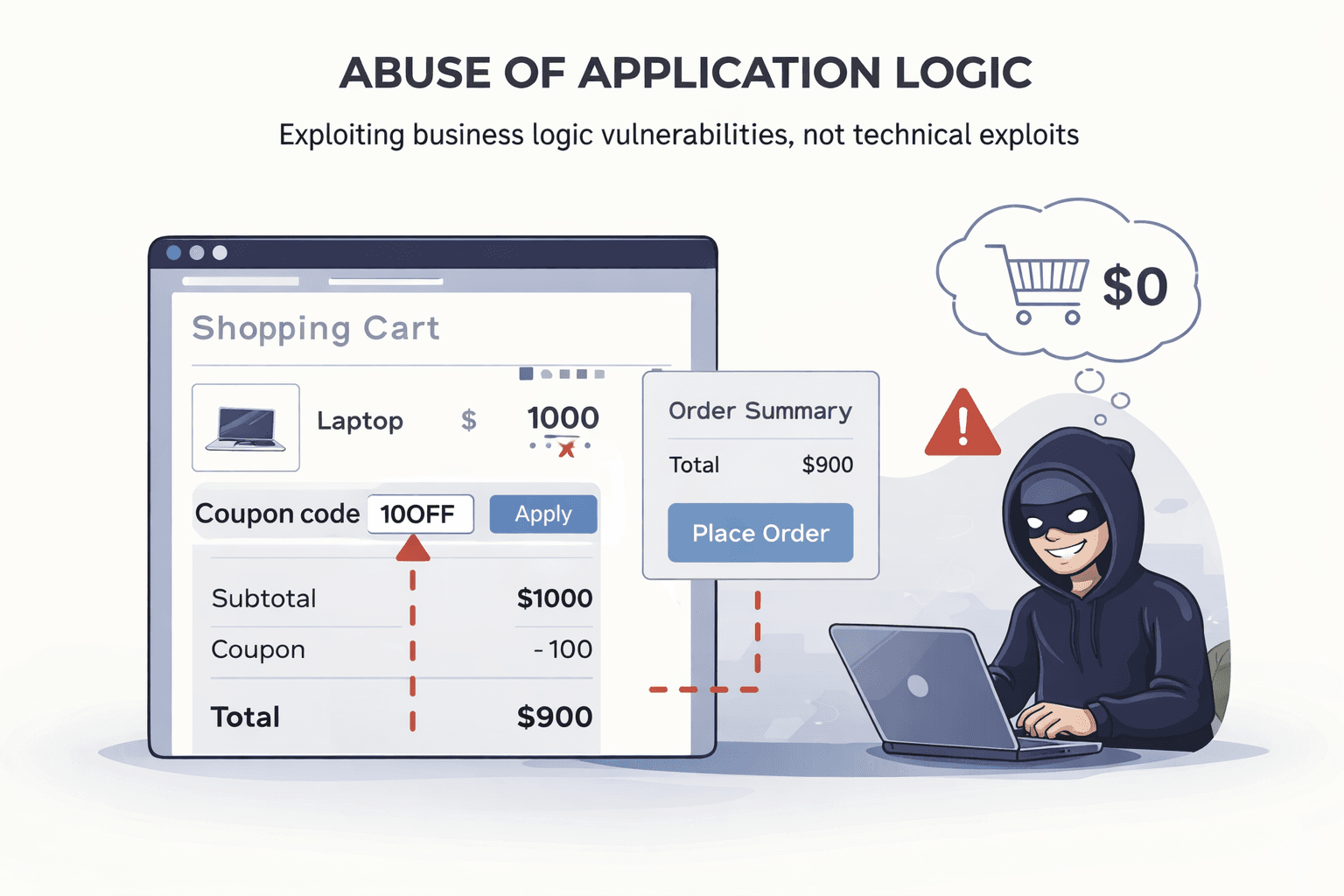

OWASP Top 10 Does Not Teach Business Logic Thinking

Business logic flaws are among the most common real-world vulnerabilities, yet they are also the hardest for beginners to recognize. OWASP Top 10 mentions broken access control, but it does not teach how business rules fail in practice.

It does not explain how workflows can be abused, limits bypassed, or state transitions manipulated in subtle ways. These issues rarely have payloads or immediate proof, which makes them uncomfortable for beginners to explore.

Modern applications are far more likely to break at the decision level than at the input validation level. Many such flaws start as small oversights.
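A limit enforced only per request is a typical small oversight. In this hypothetical Python sketch (`MAX_PER_CUSTOMER`, `Store`, and both method names are invented for illustration), a "three per customer" rule is checked against each request's quantity rather than the customer's running total, so no single request looks wrong.

```python
from typing import Dict

MAX_PER_CUSTOMER = 3  # hypothetical "three per customer" business rule


class Store:
    def __init__(self) -> None:
        self.purchased: Dict[str, int] = {}

    def buy_per_request_limit(self, customer: str, qty: int) -> None:
        # Validates only this request, so repeating it bypasses the rule.
        if qty < 1 or qty > MAX_PER_CUSTOMER:
            raise ValueError("quantity out of range")
        self.purchased[customer] = self.purchased.get(customer, 0) + qty

    def buy_cumulative_limit(self, customer: str, qty: int) -> None:
        # Validates against the running total across all requests.
        # Not safe under concurrency as written: a real backend would need the
        # check and the update to happen atomically.
        already = self.purchased.get(customer, 0)
        if qty < 1 or already + qty > MAX_PER_CUSTOMER:
            raise ValueError("limit exceeded")
        self.purchased[customer] = already + qty
```

Three requests for three items each leave the customer with nine under the first method. No payload is involved, which is exactly why this kind of flaw is easy to overlook.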

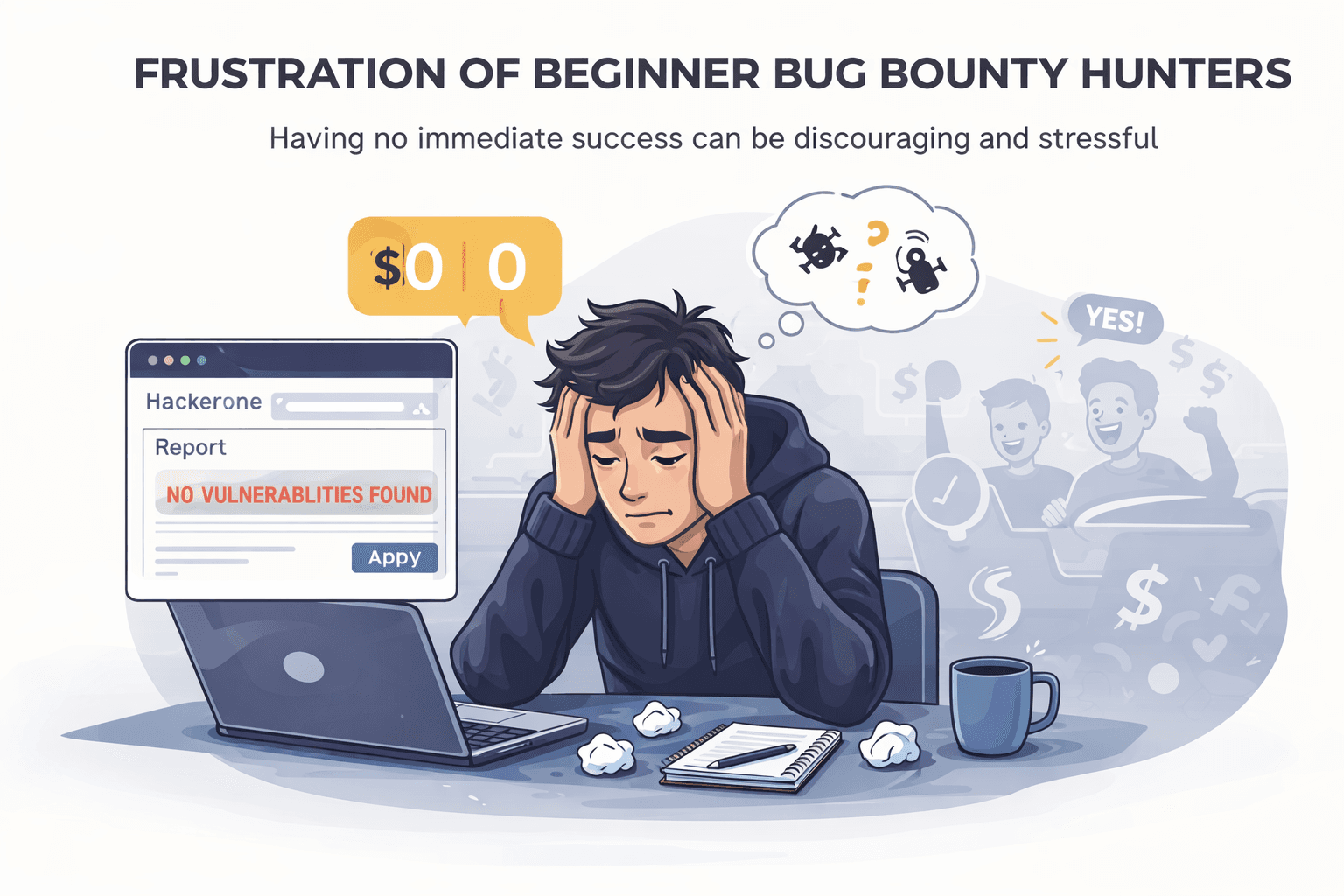

Expectation and Patience Matter More Than Most Beginners Realize

Many beginners believe that every testing session should produce something tangible. When no alert, error, or exploit appears, they assume they are doing something wrong. This expectation leads to rushed testing, frequent target switching, and eventual burnout.

Real bug hunting is slow. Most sessions end with partial understanding, notes, and unanswered questions. Experienced hunters treat this as progress. Beginners often interpret it as failure.

OWASP Top 10 does not prepare you mentally for this reality.

The Skill OWASP Top 10 Does Not Teach

What beginners truly lack is not more vulnerability knowledge. It is the ability to build a mental model of how an application works.

A mental model helps answer questions like where authorization happens, how identity is tracked, which actions change server state, and what the backend implicitly trusts. Without this model, OWASP Top 10 remains abstract theory.

Experienced hunters continuously refine this model as they test. Beginners rarely do this intentionally. Developing this mindset is closely tied to consistent habits.
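A mental model does not have to stay in your head. One hypothetical way to keep it is as structured notes; the `Endpoint` fields and the `questions_to_test` helper below are invented for illustration, and the endpoints are examples rather than a real target. The idea is that each gap in the model turns into a concrete question to test.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Endpoint:
    method: str
    path: str
    changes_state: bool        # does this request modify server-side data?
    auth_check_observed: bool  # have you confirmed the server enforces who may call it?
    trusted_inputs: List[str] = field(default_factory=list)  # values the backend appears to trust


def questions_to_test(endpoints: List[Endpoint]) -> List[str]:
    """Turn gaps in the model into concrete things to try."""
    questions = []
    for ep in endpoints:
        if ep.changes_state and not ep.auth_check_observed:
            questions.append(f"{ep.method} {ep.path}: who may do this, and is it enforced?")
        for name in ep.trusted_inputs:
            questions.append(f"{ep.method} {ep.path}: what happens if '{name}' changes?")
    return questions


# Hypothetical notes from one testing session.
model = [
    Endpoint("GET", "/api/profile", changes_state=False, auth_check_observed=True),
    Endpoint("POST", "/api/orders/{id}/cancel", changes_state=True,
             auth_check_observed=False, trusted_inputs=["id"]),
]
for q in questions_to_test(model):
    print(q)
```

The read-only profile endpoint produces no questions, while the cancel endpoint produces two. As testing answers them, the notes get updated, which is the refinement loop described above.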

Why Learning More Often Slows Progress

When beginners fail to find bugs, the natural reaction is to learn more. More courses, more tools, and more vulnerability types feel productive, but they often create overload rather than clarity.

What actually helps is slowing down and testing fewer applications more deeply. Spending time understanding one system teaches far more than scanning many targets superficially.

OWASP Top 10 is not a checklist to complete. It is a vocabulary that becomes useful only when paired with observation.

Final Thought

If you have learned OWASP Top 10 and still feel stuck, you are not behind. You are standing at the point where theory must turn into observation.

Real bug bounty progress begins when you stop searching for vulnerability names and start understanding how applications truly work. OWASP Top 10 gives you the map, but maps alone do not make you a navigator.

Learning to read the terrain, question assumptions, and stay with confusing behavior is where real skill is built.